The big concept introduced in Chapter 2.8 was the rank of a matrix, , which is the number of linearly independent columns of a matrix. The rank of a matrix told us the dimension of its column space, , which is the set of all vectors that can be created with linear combinations of ’s columns.

The ideas of rank, column space, and linear independence are all closely related, and also all apply for matrices of any shape – wide, tall, or square. This is good, because data from the real world is rarely square: we usually have many more observations () than features ().

The big idea in Chapter 2.9 is that of the inverse of a matrix, , which only applies to square matrices. But, inverses and square matrices can still be useful for our rectangular matrices with real data, since the matrix is square, no matter the shape of . And, as we discussed in Chapter 2.8 and you’re seeing in Homework 5, the matrix has close ties to the correlations between the columns of , which you already know has some connection to linear regression.

Motivation: What is an Inverse?¶

Scalar Addition and Multiplication¶

In “regular” addition and multiplication, you’re already familiar with the idea of an inverse. In addition, the inverse of a number is another number that satisfies

0 is the “identity” element in addition, since adding it to any number doesn’t change the value of . Of course, , so is the additive inverse of . Any number has an additive inverse; for example, the additive inverse of 2 is -2, and the additive inverse of -2 is 2.

In multiplication, the inverse of a number is another number that satisfies

1 is the “identity” element in multiplication, since multiplying it by any number doesn’t change the value of . Most numbers have a multiplicative inverse of , but 0 does not!

is not achieved by any .

When a multiplicative inverse exists, we can use it to solve equations like

by multiplying both sides by the multiplicative inverse of 2, which is .

Matrices¶

If is an matrix, its additive inverse is just , which comes from negating each element of . That part is not all that interesting. What we’re more interested in is the multiplicative inverse of a matrix, which is just referred to as the inverse of the matrix.

Suppose is some matrix. We’d like to find an inverse, , such that when is multiplied by in any order, the result is the identity element for matrix multiplication, . Recall, the identity matrix is a matrix with 1s on the diagonal (moving from the top left to the bottom right), and 0s everywhere else. The identity matrix is

for any , and for any .

If is an matrix, we’d need a single matrix that satisfies

In , is the right inverse of , and in , is the left inverse of . Right now, we’re interested in finding matrices that have both a left and right inverse, that happen to be the same matrix.

In order to evaluate , we’d need to be of shape , and in order to evaluate , we’d need to be of shape . The solution to these constraints is to have be a matrix (like ).

If we try that, then

has shape

has shape

but, we want and to both be the same matrix, not just separate valid matrices. So, we need , which requires to be , like its inverse.

For example, consider the matrix

It turns out that does have an inverse! Its inverse, denoted by , is

Because is the matrix that satisfies both

and

numpy is good at finding inverses, when they exist.

import numpy as np

A = np.array([[2, 4],

[3, 5]])

np.linalg.inv(A)array([[-2.5, 2. ],

[ 1.5, -1. ]])# The top-left and bottom-right elements are 1s,

# and the top-right and bottom-left elements are 0s.

# 4.4408921e-16 is just 0, with some floating point error baked in.

A @ np.linalg.inv(A)array([[1.0000000e+00, 0.0000000e+00],

[4.4408921e-16, 1.0000000e+00]])But, not all square matrices are invertible, just like not all numbers have multiplicative inverses. What happens if we try and invert

B = np.array([[1, 2],

[2, 4]])

np.linalg.inv(B)---------------------------------------------------------------------------

LinAlgError Traceback (most recent call last)

Cell In[17], line 4

1 B = np.array([[1, 2],

2 [2, 4]])

----> 4 np.linalg.inv(B)

File ~/miniforge3/envs/pds/lib/python3.10/site-packages/numpy/linalg/linalg.py:561, in inv(a)

559 signature = 'D->D' if isComplexType(t) else 'd->d'

560 extobj = get_linalg_error_extobj(_raise_linalgerror_singular)

--> 561 ainv = _umath_linalg.inv(a, signature=signature, extobj=extobj)

562 return wrap(ainv.astype(result_t, copy=False))

File ~/miniforge3/envs/pds/lib/python3.10/site-packages/numpy/linalg/linalg.py:112, in _raise_linalgerror_singular(err, flag)

111 def _raise_linalgerror_singular(err, flag):

--> 112 raise LinAlgError("Singular matrix")

LinAlgError: Singular matrixWe’re told the matrix is singular, meaning that it’s not invertible.

The point of Chapter 2.9 is to understand why some square matrices are invertible and others are not, and what the inverse of an invertible matrix really means.

What’s the Point?¶

In general, suppose is an matrix and . Then, the equation

is a system of equations in unknowns.

There may be no solution, a unique solution, or infinitely many solutions for the vector that satisfies the system, depending on and whether is in .

If we assume is square, i.e. , then is a system of equations in unknowns. If is invertible (which, remember, only square matrices can be), then there is a unique solution to the system, and we can find it by multiplying both sides by on the left.

is the unique solution to the system of equations, and we can find it without having to manually solve the system. Thinking back to the example above, we used numpy to find that the inverse of

is

We can use to solve the system

For any , the unique solution for and is

That said, as we’ll discuss at the bottom of this section, actually finding the inverse of a matrix is very computationally intensive, so usually we don’t actually compute . Knowing that it exists, and understanding the properties it satisfies, is the important part.

Linear Transformations¶

But first, I want us to think about matrix-vector multiplication as something more than just number crunching.

In Chapter 2.8, the running example was the matrix

To multiply by a vector on the right, that vector must be in , and the result will be a vector in .

Put another way, if we consider the function , maps elements of to elements of , i.e.

I’ve chosen the letter to denote that is a linear transformation.

Every linear transformation is of the form . For our purposes, linear transformations and matrix-vector multiplication are the same thing, though in general linear transformations are a more abstract concept (just like how vector spaces can be made up of functions, for example).

For example, the function

is a linear transformation from to , and is equivalent to

The function is also a linear transformation, from to .

A non-example of a linear transformation is

because no matrix multiplied by will produce .

Another non-example, perhaps surprisingly, is

This is the equation of a line in , which is linear in some sense, but it’s not a linear transformation, since it doesn’t satisfy the two properties of linearity. For to be a linear transformation, we’d need

for any . But, if we consider and as an example, we get

which are not equal. is an example of an affine transformation, which in general is any function that can be written as , where is an matrix and .

Activity 1

Activity 1.1

True or false: if is a linear transformation, then .

Activity 1.2

For each of the following functions, determine whether it is a linear transformation. If it is, write it in the form . If it is not, explain why not.

takes , multiplies the first component by 2 and second component by 3, and returns the difference between the two, as a scalar.

Solutions

Activity 1.1

True or false: if is a linear transformation, then .

True. Since is a linear transformation, we have

for any and . Plugging in , we get

Activity 1.2

is a linear transformation.

is a linear transformation.

is not a linear transformation. Notice the constant term of -5 in the first component! This is instead an affine transformation.

is not a linear transformation once again; it’s an affine transformation. If you don’t believe that it’s not a linear transformation, plug in both and ; if were a linear transformation, the latter output would be exactly double the former output.

This description tells us that , and this is a perfectly valid linear transformation, from to .

From to ¶

While linear transformations exist from to or to , it’s in some ways easiest to think about linear transformations with the same domain and codomain, i.e. transformations of the form . This will allow us to explore how transformations stretch, rotate, and reflect vectors in the same space. Linear transformations with the same domain () and codomain () are represented by matrices, which gives us a useful setting to think about the invertibility of square matrices, beyond just looking at a bunch of numbers.

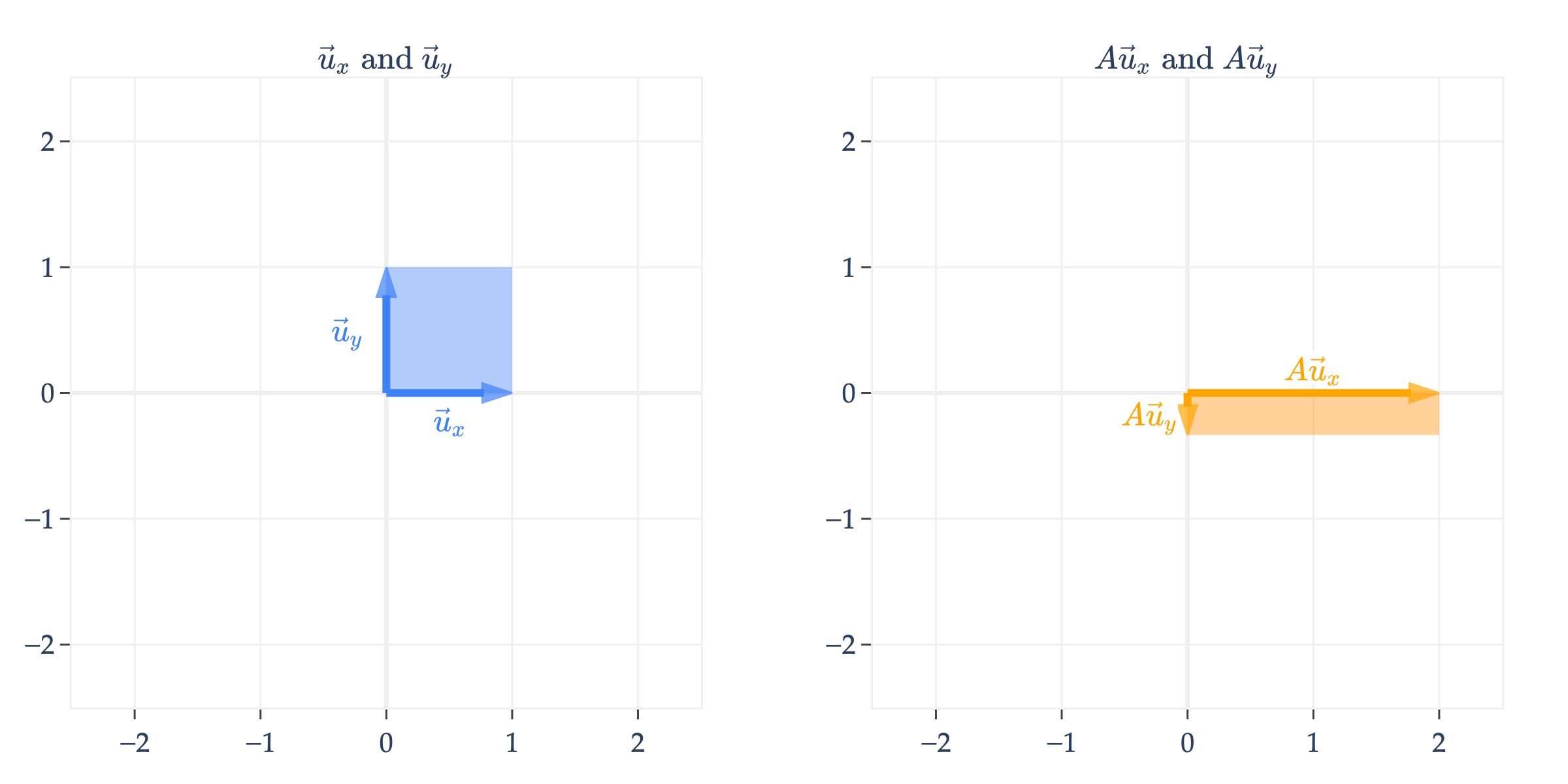

To start, let’s consider the linear transformation defined by the matrix

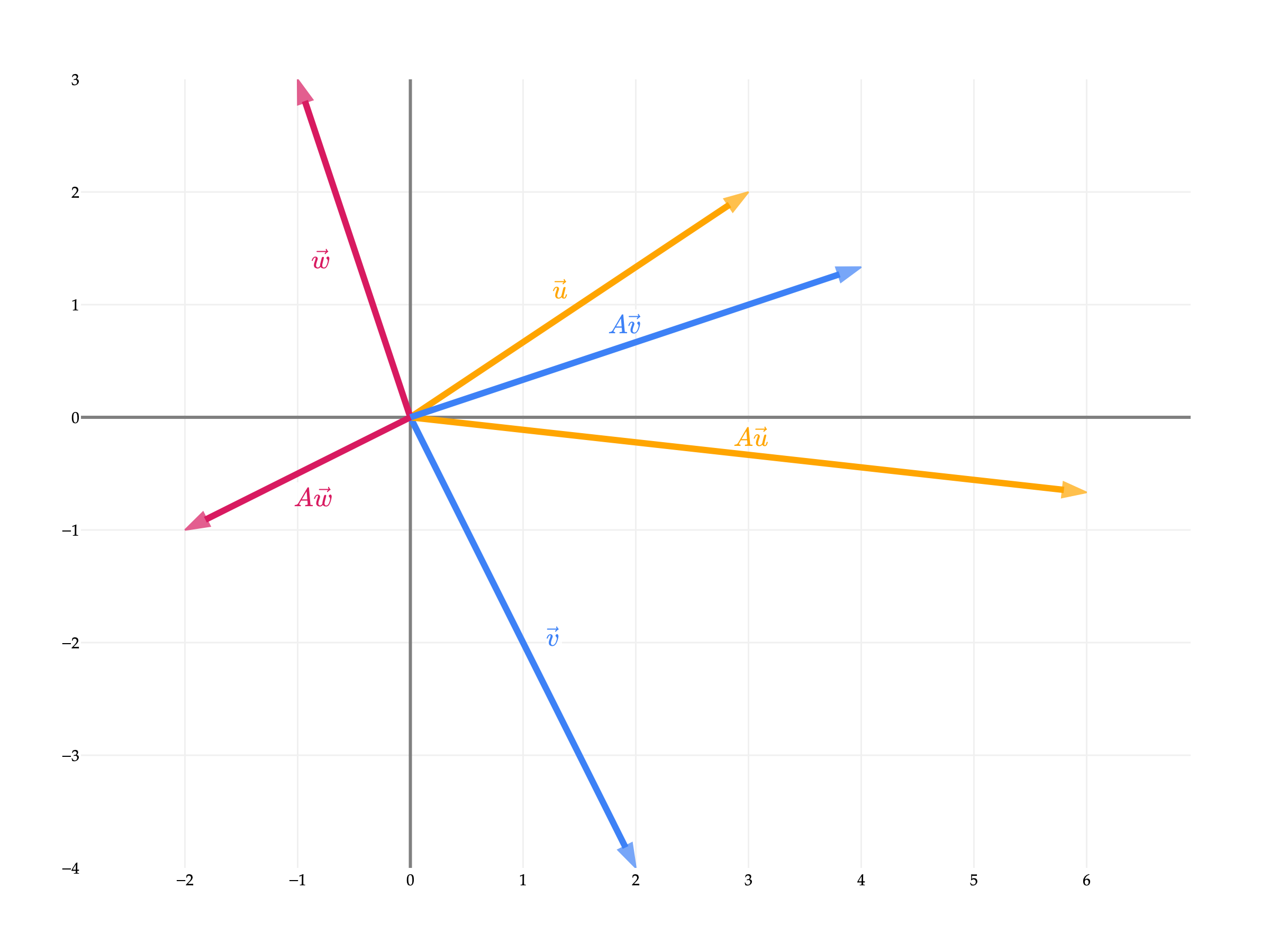

What happens to a vector in when we multiply it by ? Let’s visualize the effect of on several vectors in .

scales, or stretches, the input space by a factor of 2 in the -direction and a factor of in the -direction.

Scaling¶

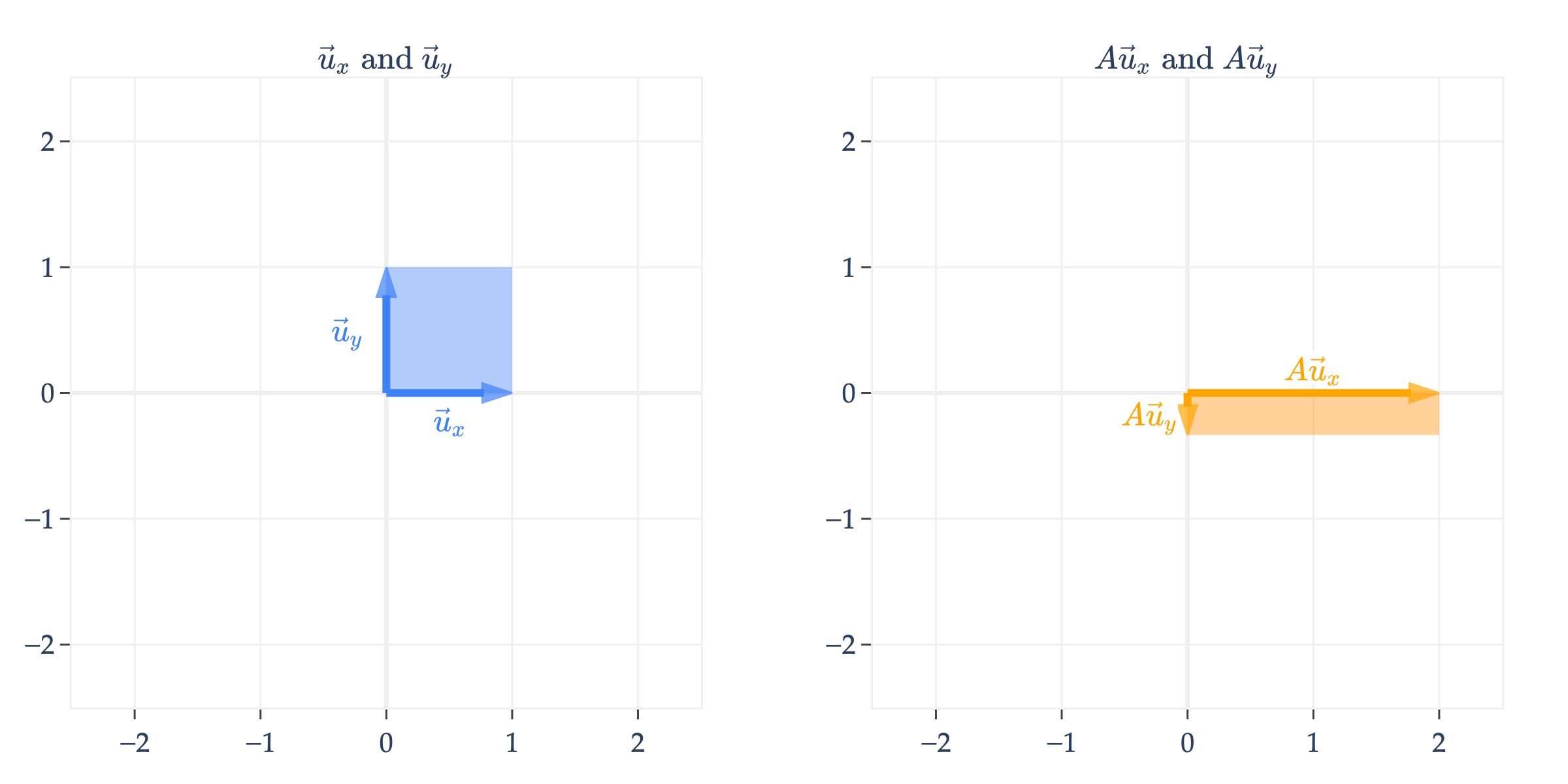

Another way of visualizing is to think about how it transforms the two standard basis vectors of , which are

(In the past I’ve called these and , but I’ll use and here since I’ll also use to represent a matrix shortly.)

Note that is just the first column of , and similarly is the second column of .

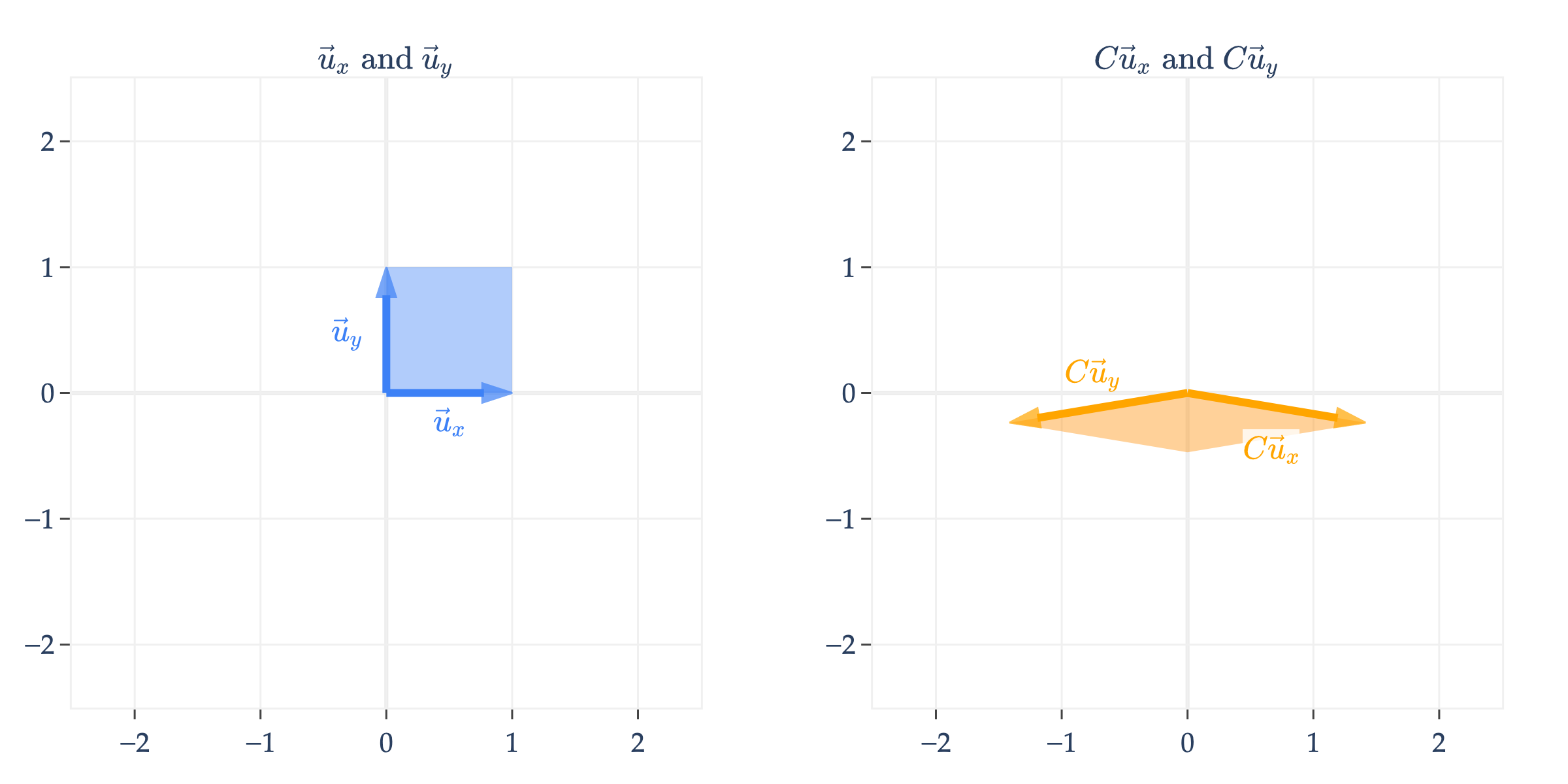

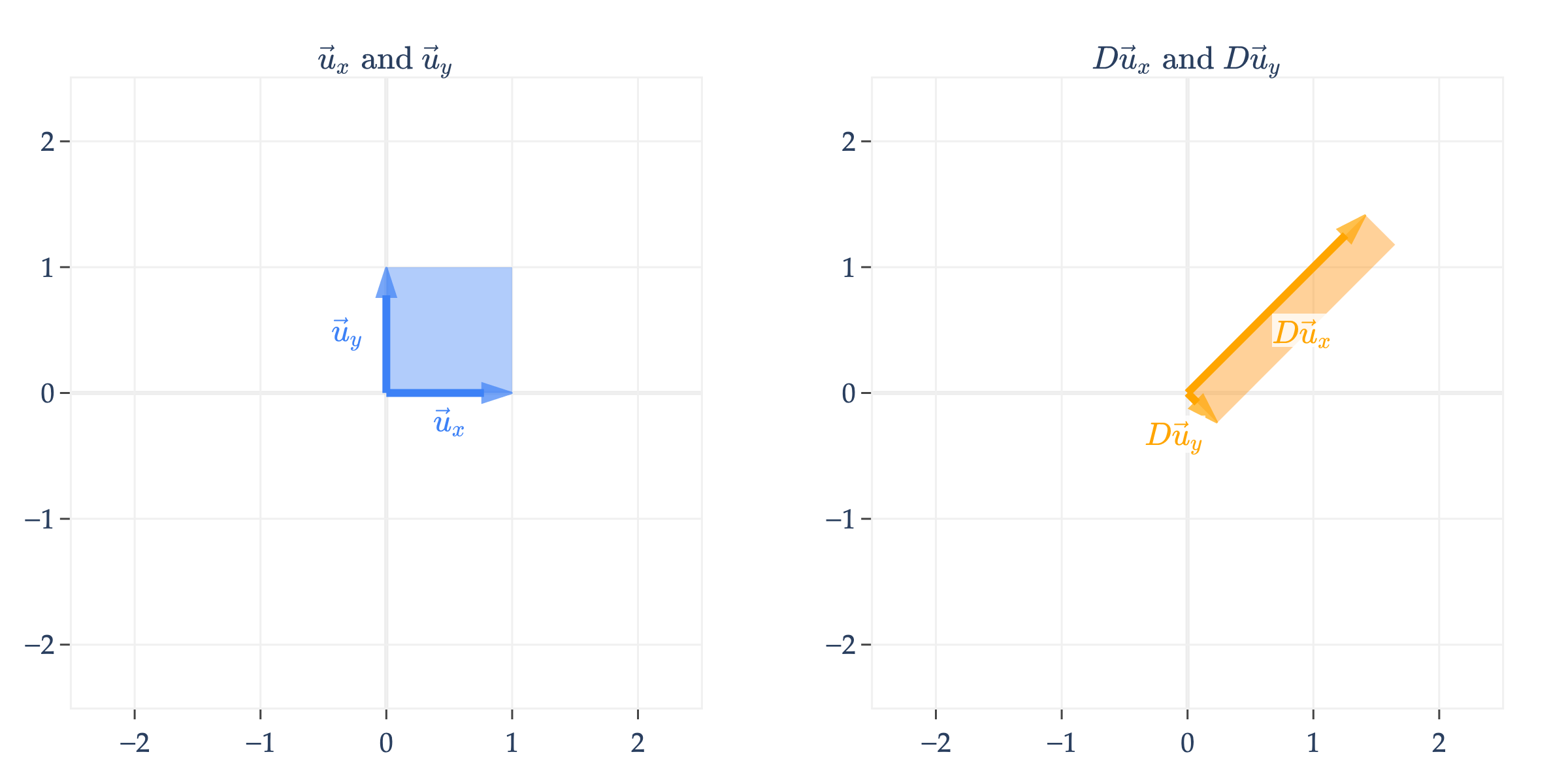

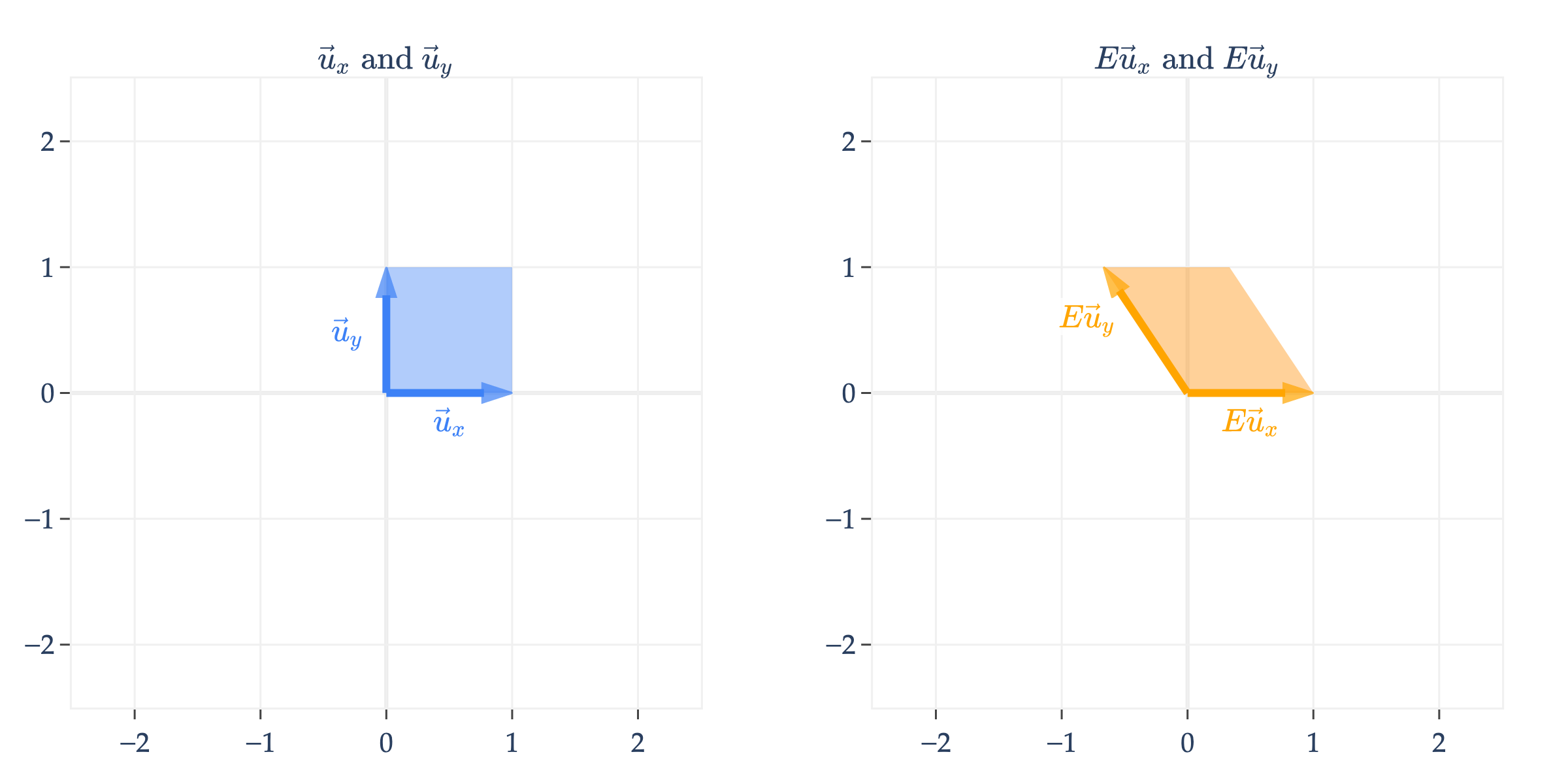

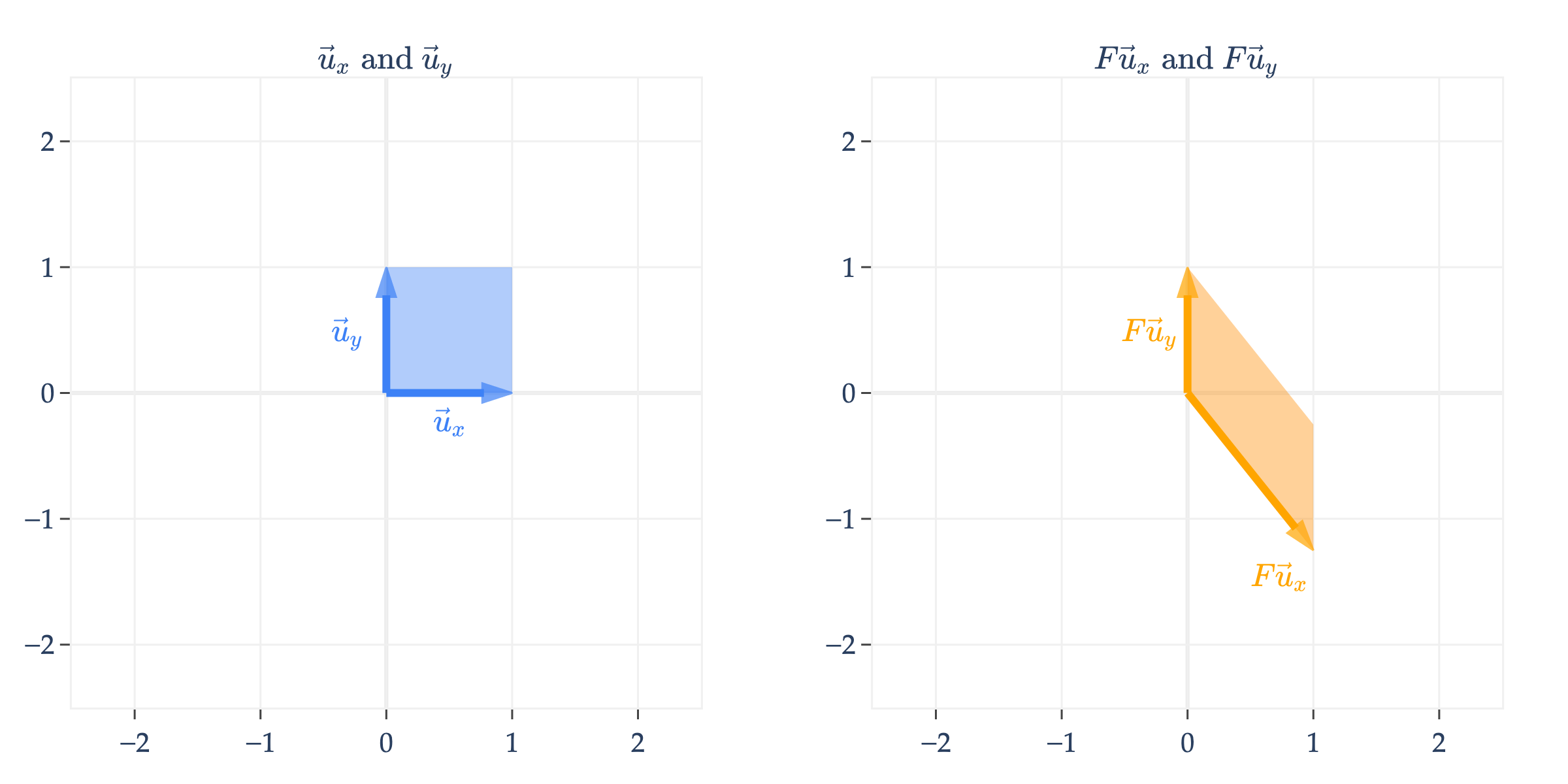

In addition to drawing and on the left and their transformed counterparts and on the right, I’ve also shaded in how the unit square, which is the square containing and , gets transformed. Here, it gets stretched from a square to a rectangle.

Remember that any vector is a linear combination of and . For instance,

So, multiplying by is equivalent to multiplying by a linear combination of and .

and the result is a linear combination of and with the same coefficients! If this sounds confusing, just remember that and are just the first and second columns of , respectively.

So, as we move through the following examples, think of the transformed basis vectors and as a new set of “building blocks” that define the transformed space (which is the column space of ).

is a diagonal matrix, which means it scales vectors. Note that any vector in can be transformed by , not just vectors on or within the unit square; I’m just using these two basis vectors to visualize the transformation.

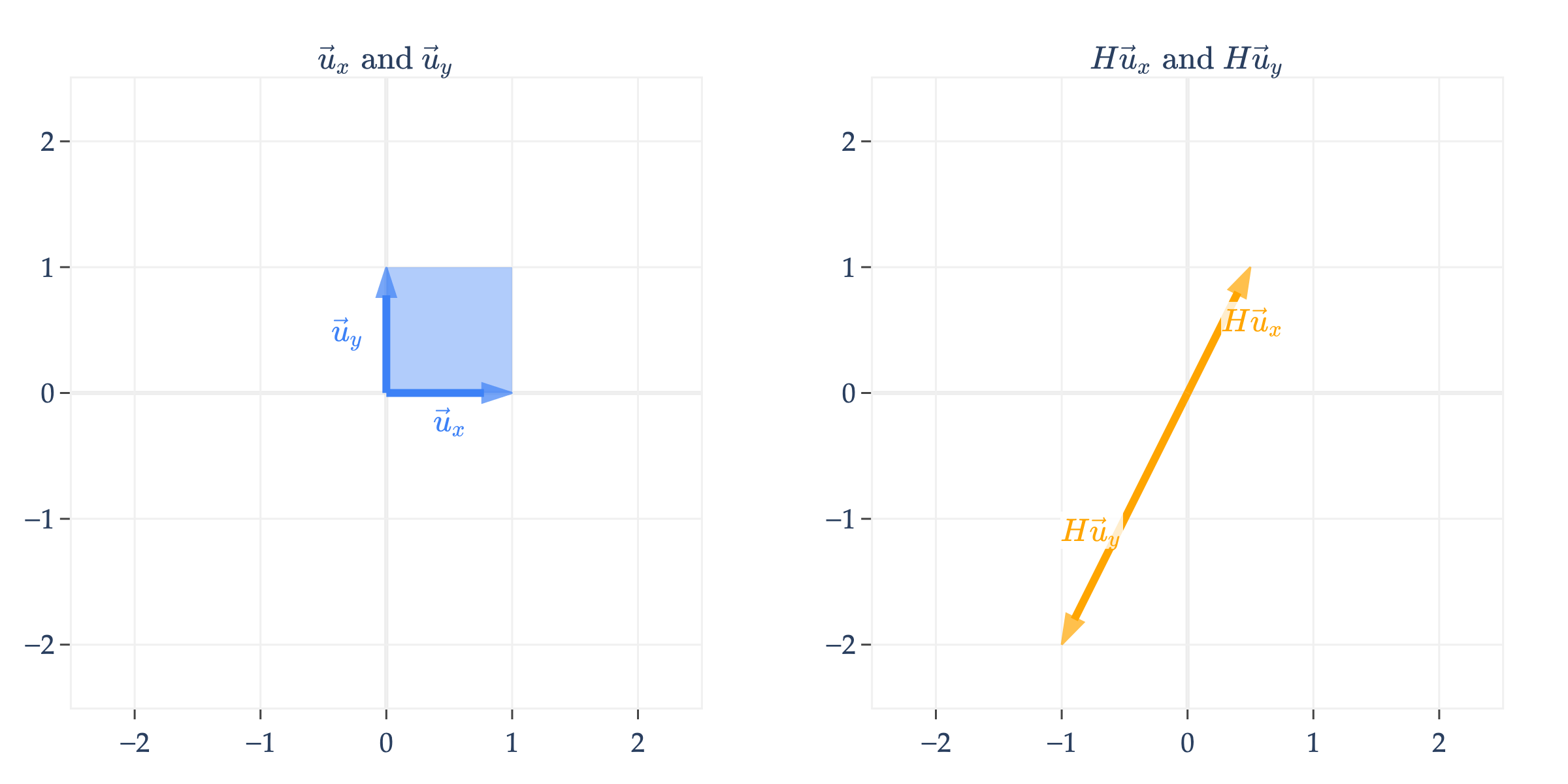

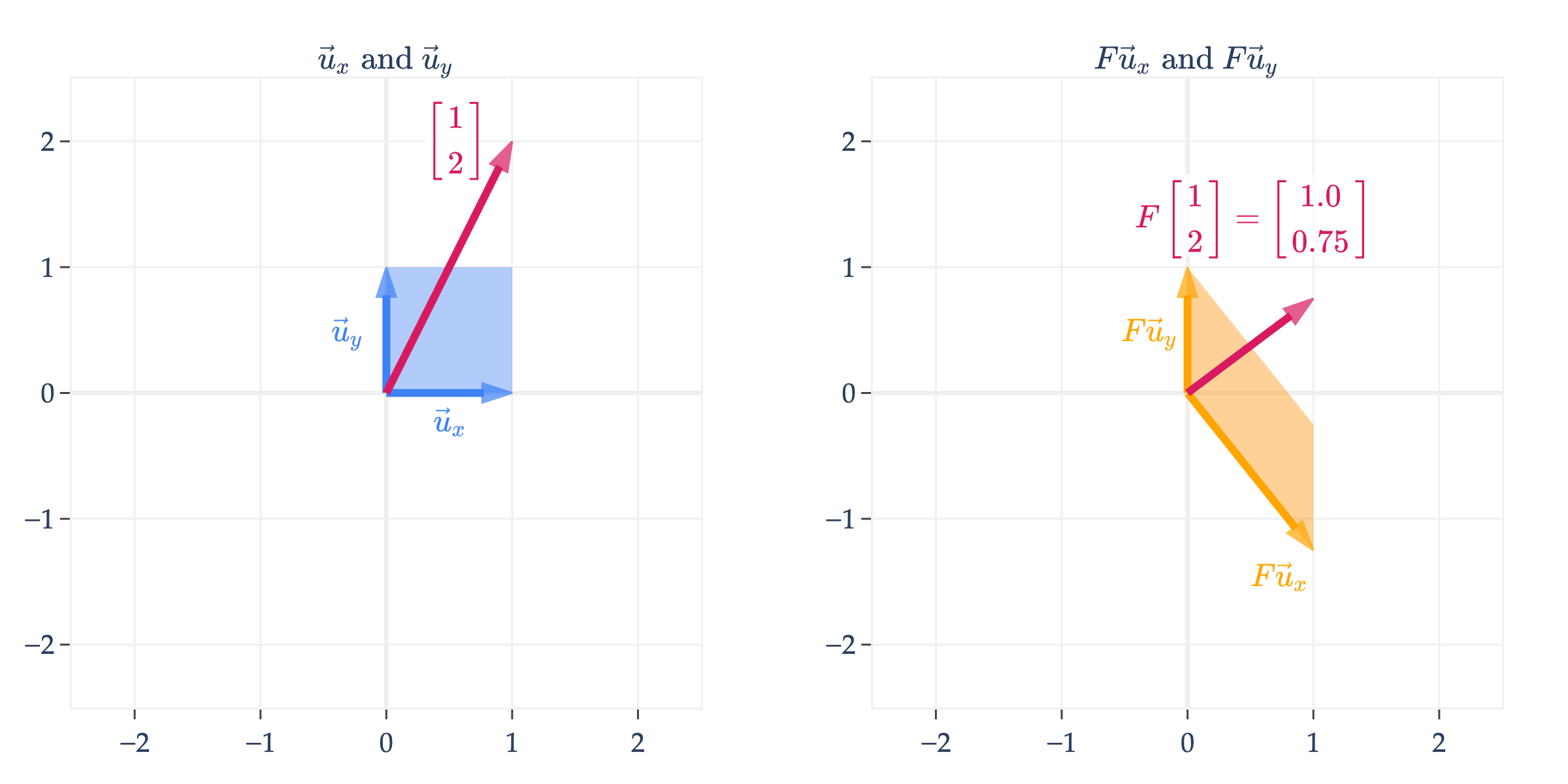

Rotations and Orthogonal Matrices¶

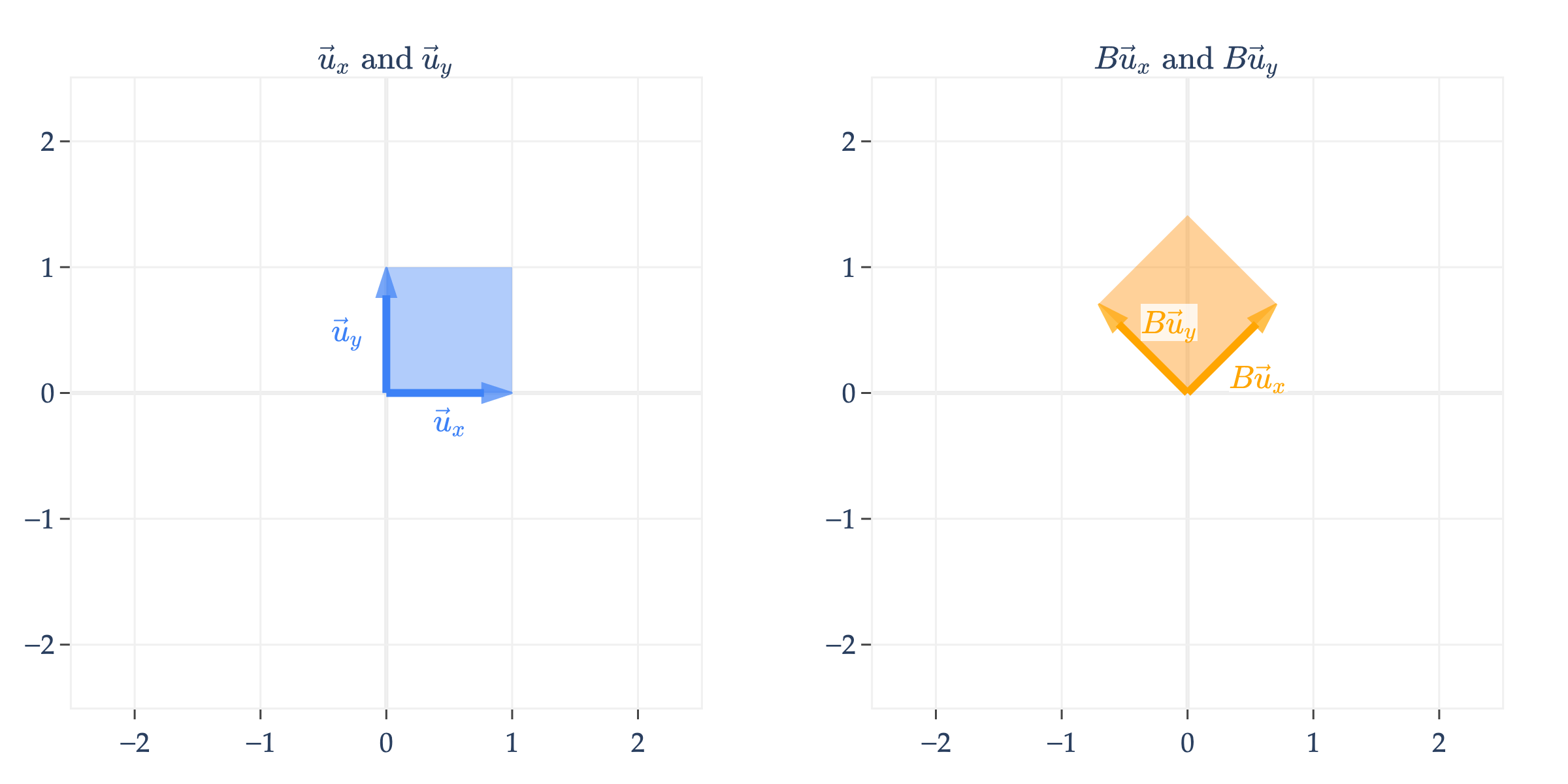

What might a non-diagonal matrix do? Let’s consider

Just to continue the previous example, the vector is transformed into

is an orthogonal matrix, which means that its columns are unit vectors and are orthogonal to one another.

Orthogonal matrices rotate vectors in the input space. In general, a matrix that rotates vectors by (radians) counterclockwise in is given by

rotates vectors by radians, i.e. .

Rotations are more difficult to visualize in and higher dimensions, but in Homework 5, you’ll prove that orthogonal matrices preserve norms, i.e. if is an orthogonal matrix and , then . So, even though an orthogonal matrix might be doing something harder to describe in , we know that it isn’t changing the lengths of the vectors it’s multiplying.

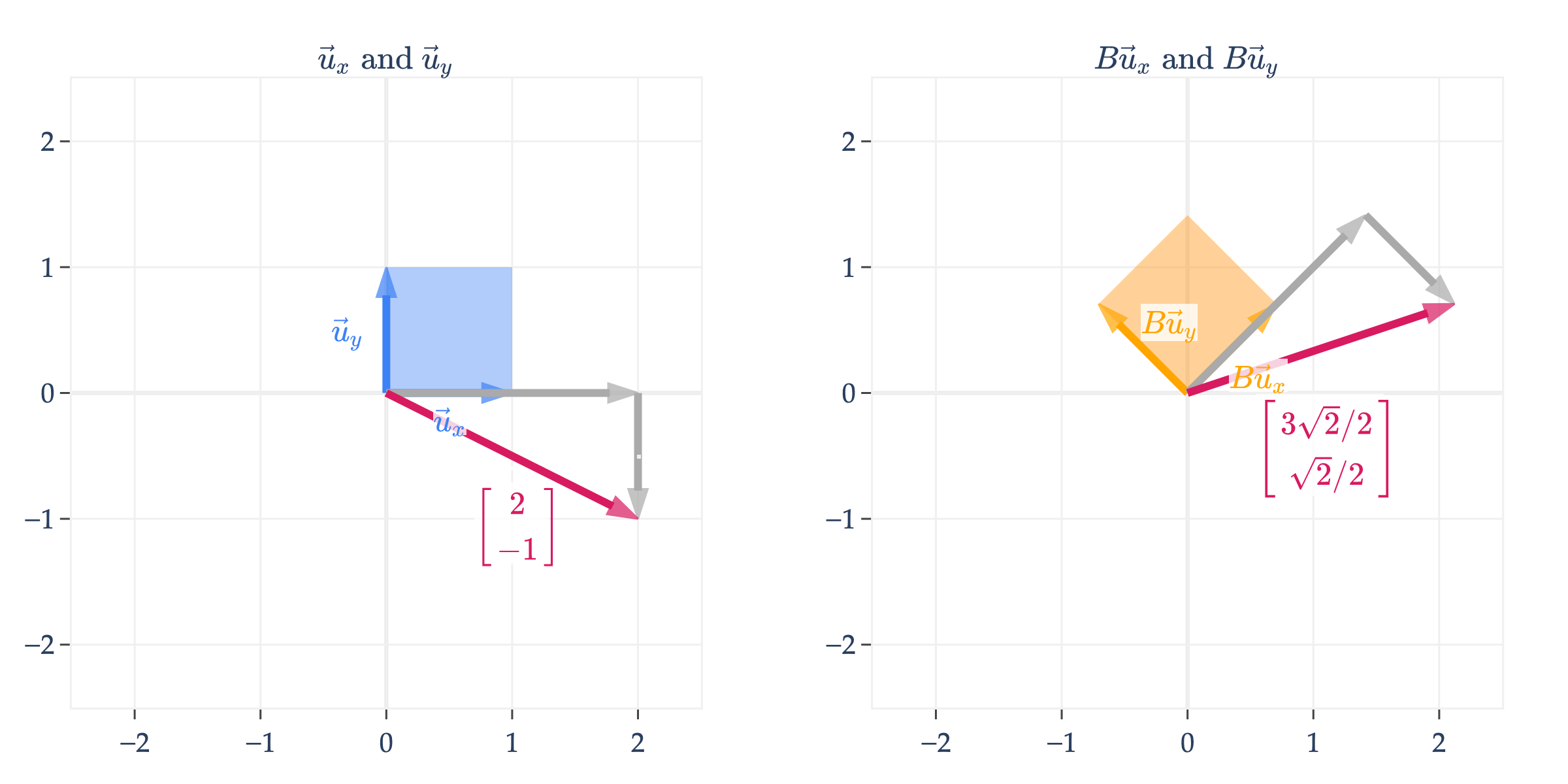

To drive home the point I made earlier, any vector , once multiplied by , ends up transforming into

Composing Transformations¶

We can even apply multiple transformations one after another. This is called composing transformations. For instance,

is just

Note that rotates the input vector, and then scales it. Read the operations from right to left, since .

is different from

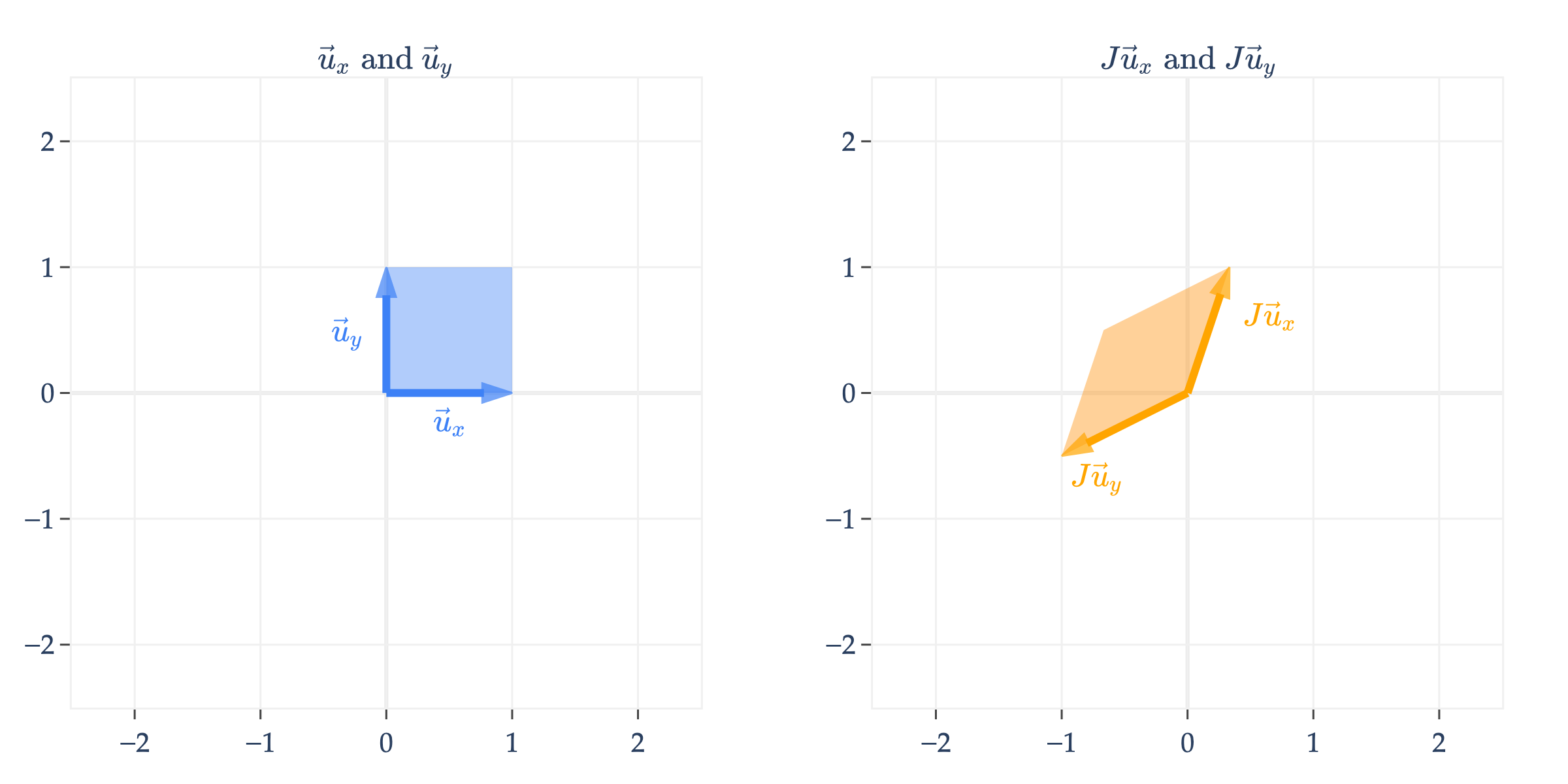

Shears¶

is a shear matrix. Think of a shear as a transformation that slants the input space along one axis, while keeping the other axis fixed. What helps me interpret shears is looking at them formulaically.

Note that the -coordinate of input vectors in remain unchanged, while the -coordinate is shifted by , which results in a slanted shape.

Similarly, is a shear matrix that keeps the -coordinate fixed, but shifts the -coordinate, resulting in a slanted shape that is tilted downwards.

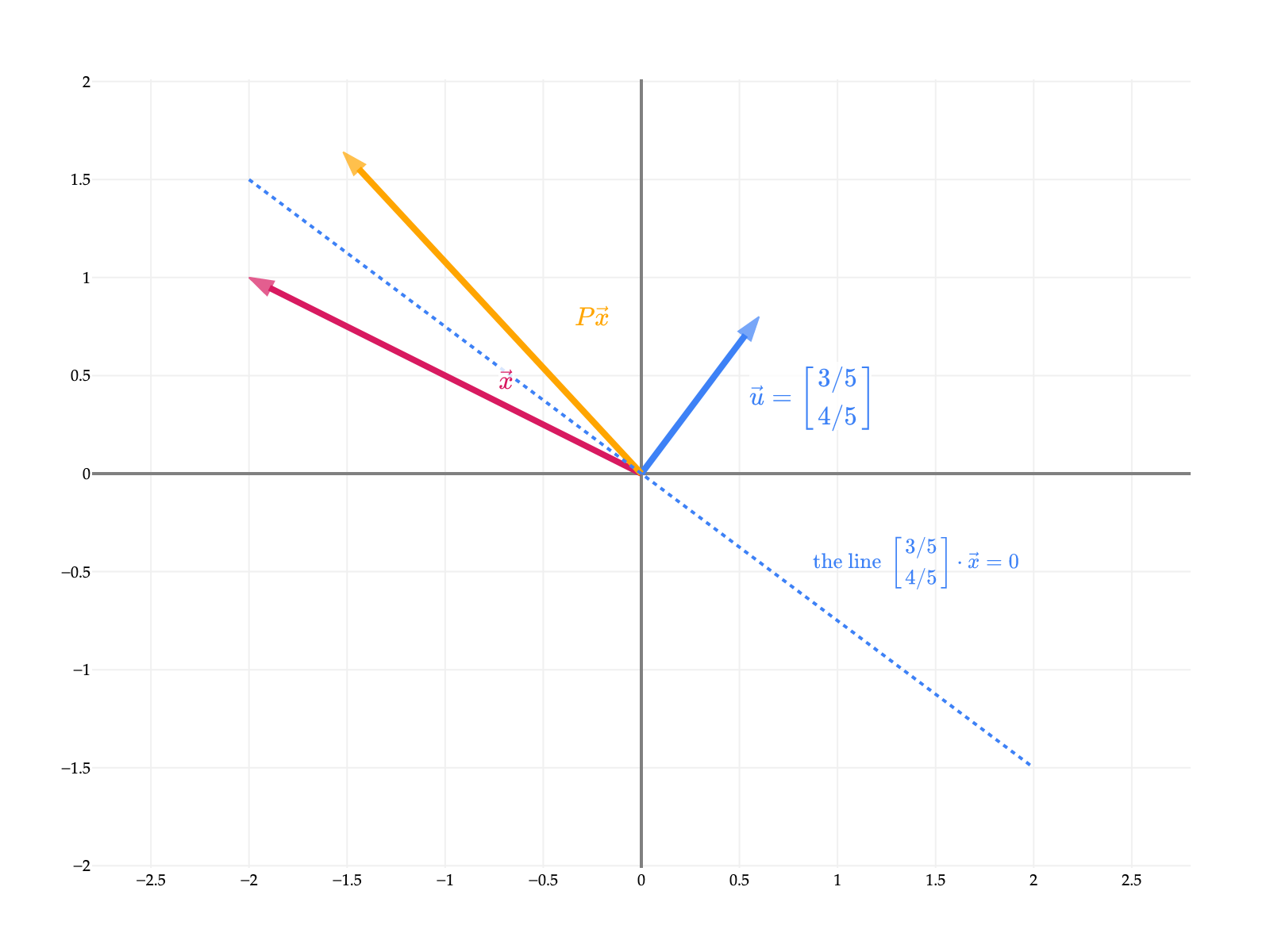

Projections¶

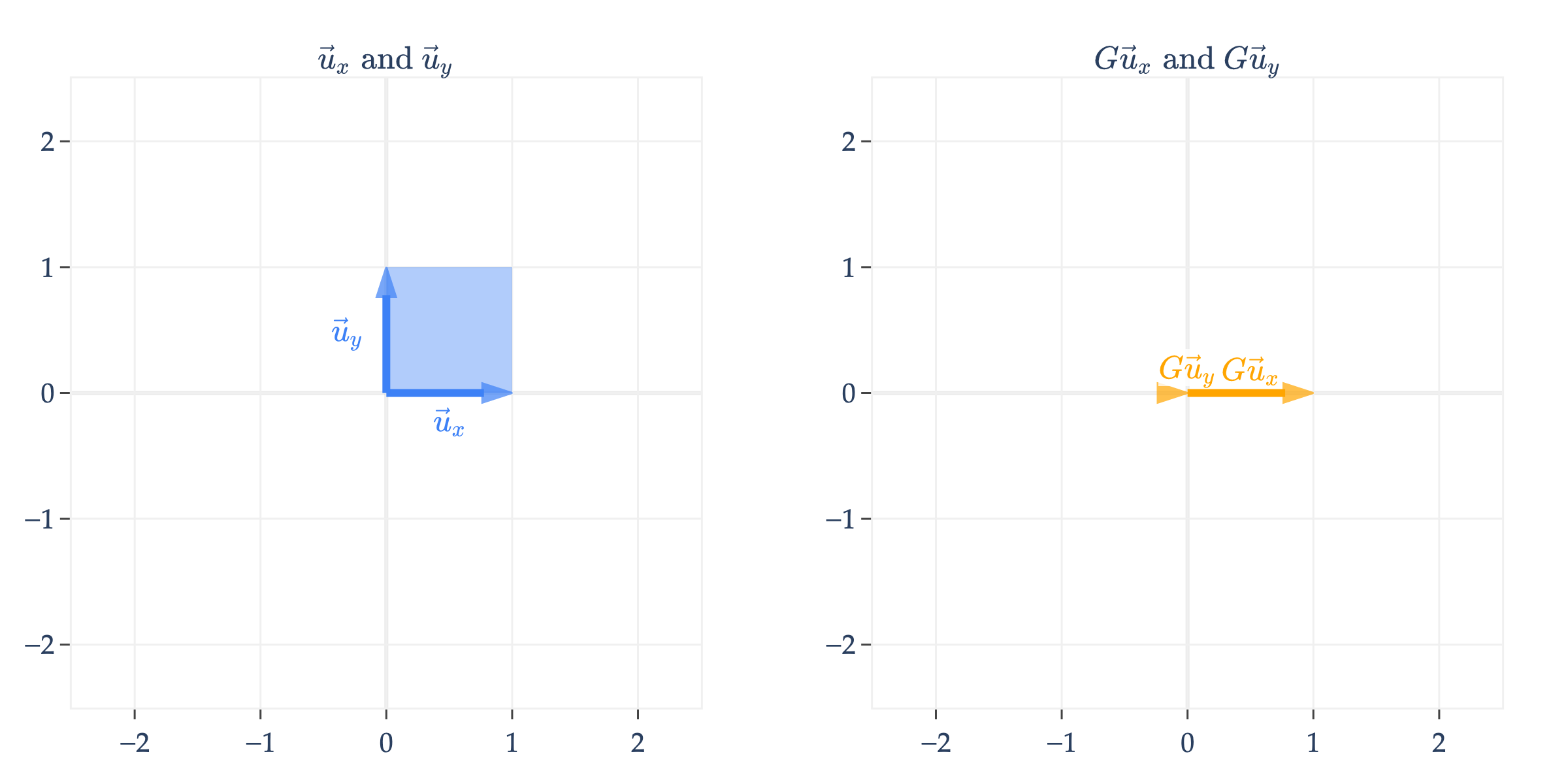

So far we’ve looked at scaling, rotation, and shear matrices. Yet another type is a projection matrix.

projects onto the -axis and throws away the -coordinate. Note that maps the unit square to a line, not another four-sided shape.

You might also notice that, unlike the matrices we’ve seen so far, is not all of , but rather it’s just a line in , since ’s columns are not linearly independent.

below works similarly.

is the line spanned by , so will always be some vector on this line.

Put another way, if , then is

but since and are both on the line spanned by , is really just a scalar multiple of .

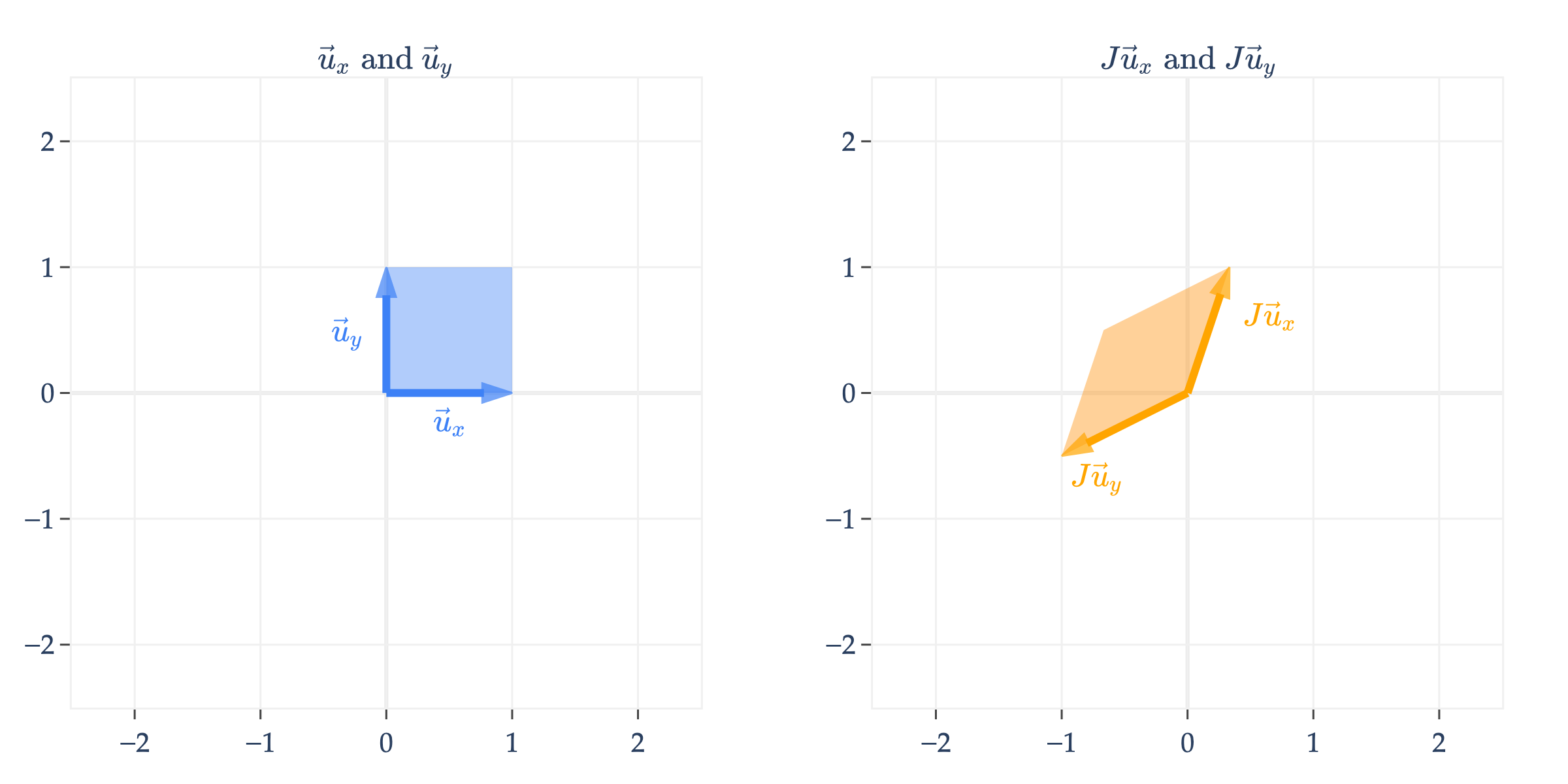

Arbitrary Matrices¶

Finally, I’ll comment that not all linear transformations have a nice, intuitive interpretation. For instance, consider

turns the unit square into a parallelogram. In fact, so did , , , , , and ; all of these transformations map the unit square to a parallelogram, with some additional properties (e.g. ’s parallelogram was a rectangle, ’s had equal sides, etc.).

There’s no need to memorize the names of these transformations – after all, they only apply in and perhaps where we can visualize.

Speaking of , an arbitrary matrix can be thought of as a transformation that maps the unit cube to a parallelepiped (the generalization of a parallelogram to three dimensions).

What do you notice about the transformation defined by , and how it relates to ’s columns? (Drag the plot around to see the main point.)

Since ’s columns are linearly dependent, maps the unit cube to a flat parallelogram.

The Determinant¶

It turns out that there’s a formula for

area of the parallelogram formed by transforming the unit square by a matrix

volume of the parallelepiped formed by transforming the unit cube by a matrix

in general, the -dimensional “volume” of the object formed by transforming the unit -cube by an matrix

That formula is called the determinant of , and is denoted .

Why do we care? Remember, the goal of this section is to find the inverse of a square matrix , if it exists, and the determinant will give us one way to check if it does.

In the case of the projection matrices and above, we saw that their columns were linearly dependent, and so the transformations and mapped the unit square to a line with no area. Similarly above, mapped the unit cube to a flat parallelogram with no volume. In all other transformations, the matrices’ columns were linearly independent, so the resulting object had a non-zero area (in the case of matrices) or volume (in the case of matrices).

So, how do we find ? Unfortunately, the formula is only convenient for matrices.

For example, in the transformation

the area of the parallelogram formed by transforming the unit square is

Note that a determinant can be negative! So, to be precise, describes the -dimensional volume of the object formed by transforming the unit -cube by .

The sign of the determinant depends on the order of the columns of the matrix. For example, swap the columns of and its determinant would be . (If is “to the right” of , the determinant is positive, like with the standard basis vectors; if is “to the left” of , the determinant is negative.)

The determinant of an matrix can be expressed recursively using a weighted sum of determinants of smaller matrices, called minors. For example, if is the matrix

then is

The matrix is a minor of ; it’s formed by deleting the first row and first column of . Note the alternating signs in the formula. This formula generalizes to matrices, but is not practical for anything larger than . (What is the runtime of this algorithm?)

The computation of the determinant is not super important. The big idea is that the determinant of is a single number that tells us whether ’s transformation “loses a dimension” or not.

Some useful properties of the determinant are that, for any matrices and ,

(notice the exponent!)

If results from swapping two of ’s columns (or rows), then

The proofs of these properties are beyond the scope of our course. But, it’s worthwhile to think about what they mean in English in the context of linear transformations.

implies that the rows of (which are the columns of ) create the same “volume” as the columns of .

matches our intuition that linear transformations can be composed. is the result of applying to , then to the result.

multiplies each column of by , and so the volume of the resulting object is scaled by .

If results from swapping two of ’s columns (or rows), then $ reflects that swapping two columns reverses the orientation of the transformation, flipping the signed volume from positive to negative (or vice versa) while preserving its magnitude.

Activity 2

Activity 2.1

Find the determinant of the following matrices:

Activity 2.2

Suppose and are both matrices. Is in general?

Activity 2.3

Suppose we multiply ’s 2nd column by 3. What happens to ?

Activity 2.4

If ’s columns are linearly dependent, then find .

Activity 2.5

Find the determinant of (Hint: The answer does not depend on !).

is a orthogonal matrix. If is an orthogonal matrix, then what is ?

Inverting a Transformation¶

Remember, the big goal of this section is to find the inverse of a square matrix .

Since a square matrix corresponds to a linear transformation, we can think of as “reversing” or “undoing” the transformation.

For example, if scales vectors, should scale by the reciprocal, so that applying and then returns the original vector.

The simplest case involves a diagonal matrix, like

To undo the effect of , we can apply the transformation

Many of the transformations we looked at involving matrices are reversible, and hence the corresponding matrices are invertible. If the matrix rotates by , the inverse is a rotation by . If the matrix shears by “dragging to the right”, the inverse is a shear by “dragging to the left”.

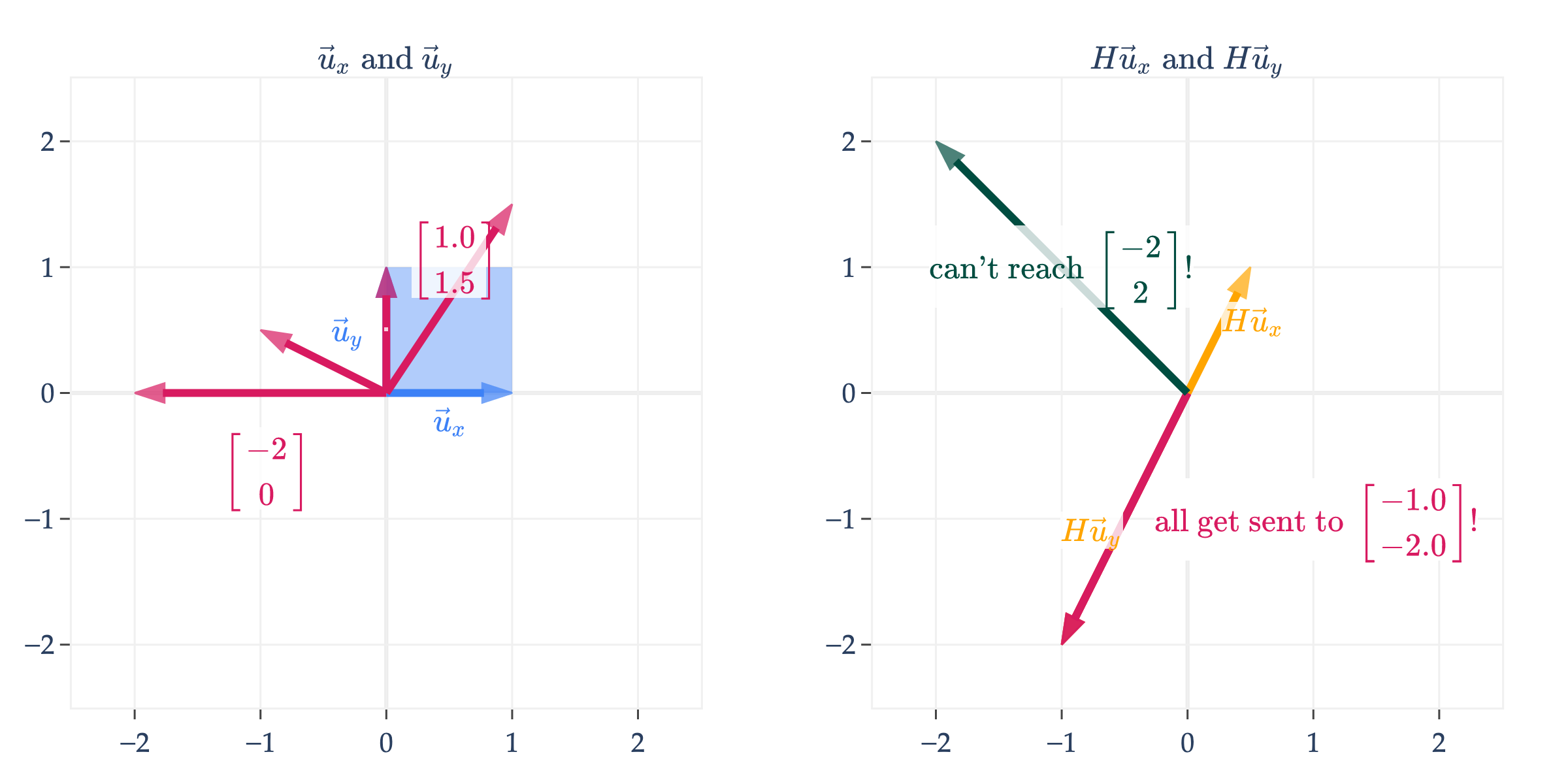

Another way of visualizing whether a transformation is reversible is whether given a vector on the right, there is exactly one corresponding vector on the left. By exactly one, I mean not 0, and not multiple.

Here, we visualize

Given any vector of the form , there is exactly one vector that satisfies this equation.

On the other hand, if we look at

the same does not hold true. Given any vector on the right, there are infinitely many vectors such that . The vectors in pink on the left are all sent to the same vector on the right, .

And, there are no vectors on the left that get sent to on the right. Any vector in that isn’t on the line spanned by is unreachable.

You may recall the following key ideas from discrete math:

A function is invertible if and only if it is both one-to-one (injective) and onto (surjective).

A function is one-to-one if and only if no two inputs get sent to the same output, i.e. implies .

A function is onto if every element of the codomain is an output of the function, i.e. for every , there exists an such that .

The transformation represented by is neither one-to-one (because and get sent to the same point) nor onto (because isn’t mapped to by any vector in ).

In order for a linear transformation to be invertible, it must be both one-to-one and onto, i.e. it must be a bijection. Again, don’t worry if these terms seem foreign: I’ve provided them here to help build connections to other courses if you’ve taken them. If not, the rest of my coverage should still be sufficient.

Inverting a Matrix¶

The big idea I’m trying to get across is that an matrix is invertible if and only if the corresponding linear transformation can be “undone”.

That is, is invertible if and only if given any vector , there is exactly one vector such that . If the visual intuition from earlier didn’t make this clear, here’s another concrete example. Consider

, since ’s first two columns are scalar multiples of one another. Let’s consider two possible ’s, each depicting a different case.

. This is in . The issue with is that there are infinitely many linear combinations of the columns of that equal this .

. This is not in , meaning there is no such that .

But, if ’s columns were linearly independent, they’d span all of , and so would have a unique solution for any we think of.

Definition¶

The properties in the box above are sometimes together called the invertible matrix theorem. This is not an exhaustive list, either, and we’ll see other equivalent properties as time goes on.

Inverse of a Matrix¶

As was the case with the determinant, the general formula for the inverse of a matrix is only convenient for matrices.

You could solve for this formula by hand, by finding scalars , , , and such that

Note that the formula above involves division by . If , then is not invertible, but is just the determinant of ! This should give you a bit more confidence in the equivalence of the statements “ is invertible” and “”.

Let’s test out the formula on some examples. If

then

and indeed both

and

hold.

On the other hand,

is not invertible, since its columns are linearly dependent.

Activity 3

Suppose is an invertible matrix.

Is invertible? If so, what is its inverse?

What is ?

Is invertible? If so, what is its inverse?

Beyond Matrices¶

For matrices larger than , the calculation of the inverse is not as straightforward; there’s no simple formula. In the case, we’d need to find 9 scalars such that

This involves solving a system of 3 equations in 3 unknowns, 3 times – one per column of the identity matrix. One such system is

You can quickly see how this becomes a pain to solve by hand. Instead, we can use one of two strategies:

Using row reduction, also known as Gaussian elimination, which is an efficient method for solving systems of linear equations without needing to write out each equation explicitly. Row reduction can be used to both find the rank and inverse of a matrix, among other things. More traditional linear algebra courses spend a considerable amount of time on this concept, though I’ve intentionally avoided it in this course to instead spend time on conceptual ideas most relevant to machine learning. That said, you’ll get some practice with it in a future homework.

Using a pre-built function in

numpythat does the row reduction for us.

At the end of this section, I give you some advice on how to (and not to) compute the inverse of a matrix in code.

More Examples¶

As we’ve come to expect, let’s work through some examples that illustrate important ideas.

Example: Inverting a Product¶

Suppose and are both invertible matrices. Is invertible? If so, what is its inverse?

Suppose , and , , and are all invertible matrices. What is the inverse of ?

If , , and are all matrices, and is invertible, must and both be invertible?

In general, if is invertible, must and both be invertible?

Solutions

If and are both invertible matrices, then is indeed invertible, with inverse

To confirm, we should check that is both the left inverse of , meaning , and that it is the right inverse of , meaning .

Since we know that , , and are all invertible,

This tells us that is the inverse of , since multiplying by it gets us back to . (For square matrices, it doesn’t matter whether we multiply on the left or right; the inverse is the same and is unique.)

If , , and are all matrices, and is invertible, then and must individually be invertible, too. One way to argue about this is using facts about determinants. Earlier, we learned

If is invertible, , but that must mean too, and so neither nor can be 0 either, meaning and are both invertible.

In general, if is invertible, but we don’t know anything about the shapes of and , it’s not necessarily true that and are individually invertible, because they may not be square! Consider

is an invertible matrix, but neither nor are individually invertible, since neither is square.

Example: Inverting a Sum¶

Suppose and are both invertible matrices. Is invertible? If so, what is its inverse?

Solution

In general, no, is not guaranteed to be invertible.

As a counterexample, suppose is invertible, and that . Then, , but

and a matrix of all 0’s is not invertible (since it’s columns don’t span all of ).

Example: Inverting ¶

Suppose is an matrix. Note that is not square, and so it is not invertible. However, is a square matrix, and so it is possible that it is invertible.

Explain why is invertible if and only if ’s columns are linearly independent.

Solution

Recall that if is , then is .

In Chapter 2.8, we proved that

The only way for to be invertible is if . But, if and only if , which happens when all of ’s columns are linearly independent.

Example: Orthogonal Matrices¶

Recall, an matrix is orthogonal if .

What is the inverse of ? Explain how this relates to the formula for rotation matrices in from earlier,

Solution

Looking at , we see that

since multiplying by on either side gets us back to .

In , if

then

since and . In English, if corresponds to rotating by in the counterclockwise direction, corresponds to rotating by in the counterclockwise direction, i.e. by in the opposite direction.

Example: Householder Reflection¶

Suppose is a unit vector. Let

is called the Householder matrix. reflects across the line in (or plane in , or in general, hyperplane in ) .

For example, suppose . Then,

reflects across the line , i.e. , or , or in more interpretable syntax, .

Show, just using the definition , that is an orthogonal matrix.

Find . (Why does the fact that is orthogonal imply that is invertible?)

Solution

If is orthogonal, then . Let’s start by showing that . Note that .

To be thorough, we’d need to show that , but I’ll save that for you to do.

Note that in the final step, the and cancelled out. But, the -4 came from , while the 4 came from . All of this is to say that if we changed the -2 to some other number in the definition of the Householder matrix, we’d no longer have , because -2 is the only non-zero solution to .

Since is orthogonal, all of ’s columns are orthogonal, implying that they’re linearly independent, meaning that is invertible. Since is orthogonal, , and above, we already showed that

Intuitively, involves reflecting across a line, so involves reflecting back.

Computing the Inverse in Code¶

Recall that if is an matrix and , then

is a system of equations in unknowns. One of the big (conceptual) usages of the inverse is to solve such a system, as I mentioned at the start of this section. If is invertible, then we can solve for by multiplying both sides on the left by :

has encoded within it the solution to , no matter what is. This makes very powerful. But, that power doesn’t come for free: in practice, finding is less efficient and more prone to floating point errors than solving the one system of equations in unknowns directly for the specific we care about.

Solving involves solving just one system of equations in unknowns.

Finding involves solving a system of equations in unknowns, times! Each system has the same coefficient matrix but a different right-hand side , corresponding to the columns of the identity matrix (which are the standard basis vectors in ).

The more floating point operations we need to do, the more error is introduced into the final results.

Let’s run an experiment. Suppose we’d like to find the solution to the system

This corresponds to , with

Here, we can eyeball the solution as being . How close to this “true” do each of the following techniques get?

Option 1: Using np.linalg.inv

As we saw at the start of the section, np.linalg.inv(A) finds the inverse of A, assuming it exists. The following cell finds the solution to by first computing , and then .

# Prevents unnecessary rounding.

np.set_printoptions(precision=16, suppress=True)

A = np.array([[1, 1],

[1, 1.000000001]])

b = np.array([[7],

[7.000000004]])

x_inv = np.linalg.inv(A) @ b

x_invarray([[2.9999998807907104],

[4.0000001192092896]])Option 2: Using np.linalg.solve

The following cell solves the same problem as the previous cell, but rather than asking for the inverse of , it asks for the solution to , which doesn’t inherently require inverting .

x_solve = np.linalg.solve(A, b)

x_solvearray([[3.],

[4.]])This small example already illustrates the big idea: inverting can introduce more numerical error than is necessary. If we cast the problem more abstractly, consider the matrix

where is a small constant, e.g. in the example above. The inverse of is

The closer is to 0, the larger becomes, making any floating point errors all the more costly.